Kafka is a highly scalable messaging platform that provides a method for distributing information through a series of messages organised by a specified topic. With Tungsten Replicator the incoming stream of data from the upstream replicator is converted, on a row by row basis, into a JSON document that contains the row information. A new message is created for each row, even from multiple-row transactions.

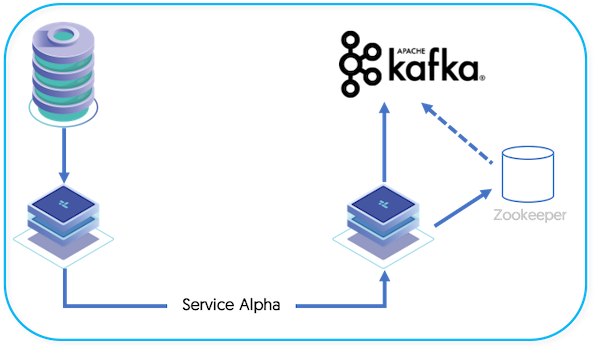

The deployment of Tungsten Replicator to Kafka service is slightly different. There are two parts to the process:

Service Alpha on the Extractor, extracts the information from the MySQL binary log into THL.

Service Alpha on the Applier, reads the information from the remote replicator as THL, and applies that to Kafka.

With the Kafka applier, information is extracted from the source database using the row-format, column names and primary keys are identified, and translated to a JSON format, and then embedded into a larger Kafka message. The topic used is either composed from the schema name or can be configured to use an explicit topic type, and the generated information included in the Kafka message can include the source schema, table, and commit time information.

The transfer operates as follows:

Data is extracted from MySQL using the standard extractor, reading the row change data from the binlog.

The Section 10.4.5, “ColumnName Filter” filter is used to extract column name information from the database. This enables the row-change information to be tagged with the corresponding column information. The data changes, and corresponding row names, are stored in the THL.

The Section 10.4.32, “PrimaryKey Filter” filter is used to add primary key information to row-based replication data.

The THL information is then applied to Kafka using the Kafka applier.

There are some additional considerations when applying to Kafka that should be taken into account:

Because Kafka is a message queue and not a database, traditional transactional semantics are not supported. This means that although the data will be applied to Kafka as a message, there is no guarantee of transactional consistency. By default the applier will ensure that the message has been correctly received by the Kafka service, it is the responsibility of the Kafka environment and configuration to ensure delivery. The replicator.applier.dbms.zookeeperString can be used to ensure acknowledgements are received from the Kafka service.

One message is sent for each row of source information in each transaction. For example, if 20 rows have been inserted or updated in a single transaction, then 20 separate Kafka messages will be generated.

A separate message is broadcast for each operation, and includes the operation type. A single message will be broadcast for each row for each operation. So if 20 rows are delete, 20 messages are generated, each with the operation type.

If replication fails in the middle of a large transaction, and the replicator goes

OFFLINE, when the replicator goes online it may resend rows and messages.

The two replication services can operate on the same machine, (See Section 5.3, “Deploying Multiple Replicators on a Single Host”) or they can be installed on two different machines.